An implementation in Scala with Akka actors.

If you want to enjoy fast transaction rates, Stellar is a great choice. But if you want to squeeze even more transactions per second out of the network, a little extra work goes a long way. This article describes a well-defined pattern for high throughput and outlines an implementation using technologies specifically geared towards highly concurrent processing.

Stellar is fast, but there’s a limit

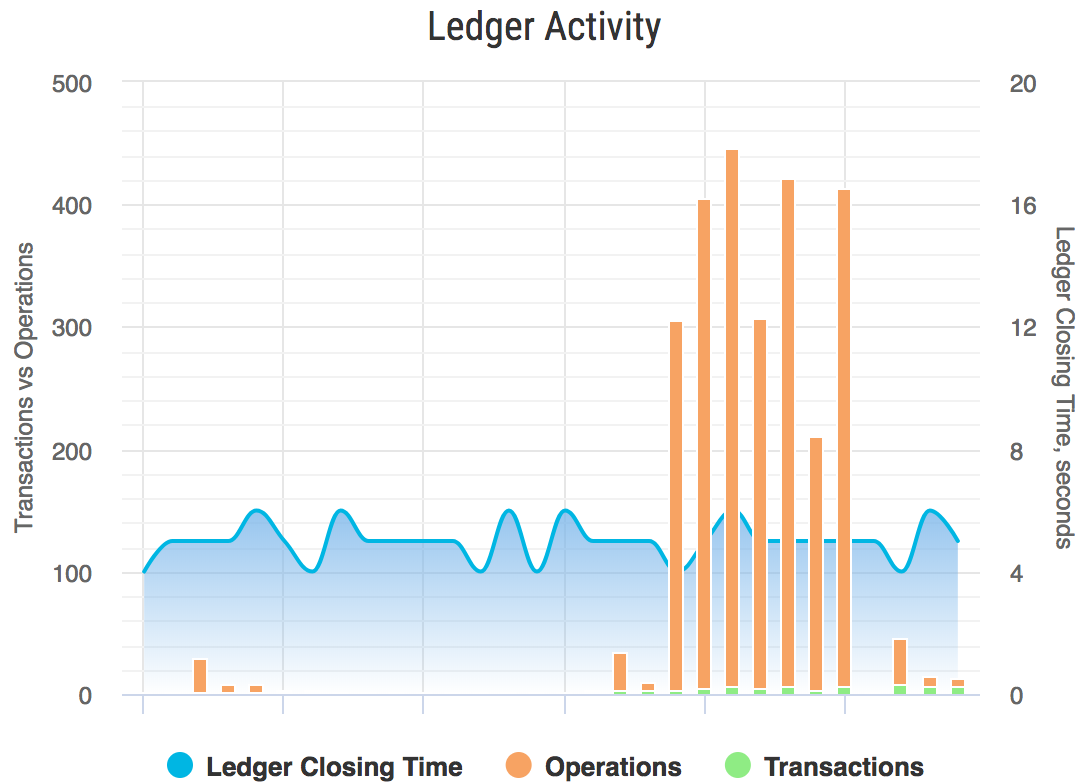

One of Stellar’s many strengths is its fast transaction rate. While other public networks have clearing times in the order of minutes or even hours, Stellar consistently averages 5 seconds for the clearing of a ledger.

That’s pretty fast. But there’s a catch if you want to submit more than one transaction at a time. Transactions are submitted independently, so there’s no way of guaranteeing the order in which they arrive. If you send even just two transactions in the same ledger, there’s a reasonable chance they will arrive out of sequence. This is a problem, because each transaction must carry the next sequence number for its source account, and the network rejects anything else.
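To make the failure mode concrete, here is a toy model of the sequence check in plain Scala, with no Stellar SDK involved. The names `Account`, `Tx`, `submit` and `applyAll` are invented for this illustration, and the rule is simplified to "a transaction is accepted only if its sequence number is exactly one more than the account's current one".

```scala
object SequenceDemo {
  // Hypothetical, simplified stand-ins for an account and a transaction.
  final case class Account(id: String, sequence: Long)
  final case class Tx(source: String, sequence: Long)

  // Accept the transaction only if it carries the next sequence number.
  def submit(account: Account, tx: Tx): Either[String, Account] =
    if (tx.sequence == account.sequence + 1) Right(account.copy(sequence = tx.sequence))
    else Left(s"bad sequence: expected ${account.sequence + 1}, got ${tx.sequence}")

  // Apply transactions in arrival order; a rejected one leaves the account unchanged.
  def applyAll(start: Account, arrivals: List[Tx]): (Account, List[String]) =
    arrivals.foldLeft((start, List.empty[String])) { case ((acc, errors), tx) =>
      submit(acc, tx) match {
        case Right(updated) => (updated, errors)
        case Left(error)    => (acc, errors :+ error)
      }
    }

  def main(args: Array[String]): Unit = {
    val account = Account("primary", sequence = 10)
    val tx11    = Tx("primary", 11)
    val tx12    = Tx("primary", 12)

    // Arriving in order: both are accepted.
    println(applyAll(account, List(tx11, tx12)))
    // Swapped: the transaction carrying 12 arrives early and is rejected.
    println(applyAll(account, List(tx12, tx11)))
  }
}
```

In the swapped case the account ends up at sequence 11 with one error recorded, so the rejected transaction would have to be rebuilt and resubmitted.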

Whilst we can enjoy very fast clearing times, it seems the only way to ensure transactions are not rejected for being out of sequence is to wait for an acknowledgement before sending the next. This severely limits our potential transaction rate. The network enforces sequential processing for good reasons – even when it doesn’t necessarily apply to our use-case. How can we work around this restriction?

Channelling your efforts

The Channel design pattern is the documented solution. In this pattern, a collection of temporary accounts acts as proxies, each concurrently transacting operations on behalf of a primary account.

Imagine, as an analogy, that you were never allowed to send an email until you’d received a response to your previous one. In such a world, it might take you days to send a dozen emails. Goodbye productivity! But what if you could hire new people and have each of them send one message on your behalf? Assuming nothing went awry, all your messages would be sent immediately.

It’s possible to do this in Stellar. Any account can transact operations on behalf of another. Transactions need only be sequential relative to the account that submits them, but the operations contained within those transactions can originate from any account.
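The distinction between the account that submits a transaction and the account that an operation acts for can be sketched with a small data model. This is plain Scala, not the SDK's types: `Payment`, `Transaction`, `accept` and `acceptAll` are hypothetical names, and sequence numbers start at zero purely for readability.

```scala
object ChannelModel {
  // Hypothetical, simplified types: not the Stellar SDK's classes.
  final case class Payment(source: String, destination: String, amountXlm: BigDecimal)
  final case class Transaction(submitter: String, sequence: Long, operations: List[Payment])

  type Sequences = Map[String, Long]

  // Only the submitting account's sequence number is checked and advanced.
  def accept(sequences: Sequences, tx: Transaction): Either[String, Sequences] = {
    val expected = sequences.getOrElse(tx.submitter, 0L) + 1
    if (tx.sequence == expected) Right(sequences.updated(tx.submitter, tx.sequence))
    else Left(s"${tx.submitter}: expected sequence $expected, got ${tx.sequence}")
  }

  // Process transactions in arrival order, stopping at the first rejection.
  def acceptAll(sequences: Sequences, txs: List[Transaction]): Either[String, Sequences] =
    txs.foldLeft[Either[String, Sequences]](Right(sequences)) { (state, tx) =>
      state.flatMap(accept(_, tx))
    }

  def main(args: Array[String]): Unit = {
    val start: Sequences = Map("primary" -> 41L, "channelA" -> 0L, "channelB" -> 0L)
    // The payment is made on behalf of the primary account...
    val pay = Payment(source = "primary", destination = "recipient", amountXlm = BigDecimal(1))
    // ...but each transaction is submitted by a different channel account.
    val txA = Transaction("channelA", 1L, List(pay))
    val txB = Transaction("channelB", 1L, List(pay))

    println(acceptAll(start, List(txA, txB)))
    println(acceptAll(start, List(txB, txA))) // arrival order no longer matters
  }
}
```

Both arrival orders are accepted and the primary account's own sequence number is never touched, which is what lets the channel accounts work in parallel.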

Digging our channel

How would such an implementation look? Ideally, we would like a model that encapsulates the full lifecycle of a channel. There’s a bit to consider.

- How wide should the channel be (i.e. how many channel accounts)?

- How do the channel accounts get funded?

- How do we distribute the work within the channel between accounts?

- How do we close the channel and recoup the left-over funds?

Additionally, as a transaction may contain up to 100 operations, it is not ideal to send just one operation per transaction. So:

- How can we buffer operations within a transaction?

I thought it was a fun challenge and decided to implement a Stellar Channel using the Akka actor library and the Scala SDK for Stellar. This stack allowed me to write a highly concurrent implementation with minimal fuss. (The code samples in this article have been edited to clearly illustrate the concepts even for readers who are not familiar with either of these tools. The full source is available on GitHub.)

The target

Because we would like to encapsulate the complexity of channels, the resulting client code must be simple.

<p> CODE: https://gist.github.com/kylemccollom/bd413eea582df0da0c7843697026c8a6.js</p>

This defines a channel that will transact on behalf of a primary account on the test network via 24 proxy accounts. We then instruct the channel to pay a recipient account 1 XLM, two thousand times. Finally, we close the channel by sending it the Close message, which merges the channel accounts and their funds back into the primary account.

Let’s explore what happens inside the channel at startup.

Turn on

As the Channel actor starts up, we create the proxy accounts by generating their account keys and issuing a single transaction to fund them all.

<p> CODE: https://gist.github.com/kylemccollom/1c3b52f015ee662913dc7dcee9a99eb4.js</p>

Not only do the proxy accounts need the minimum balance in order to be created (1 XLM), but they also need additional funds to cover transaction fees. In this case we are providing 2 XLM per account, which leaves 1 XLM for fees. At the minimum fee of 100 stroops (0.00001 XLM) per operation, that is enough for 100,000 operations per proxy account.
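The arithmetic behind that figure is easy to check. The sketch below assumes the 1 XLM minimum balance quoted above and a base fee of 100 stroops per operation; both values are network parameters that can change, and the object and method names are made up for this example. Because the fee is charged per operation, the 100,000 figure corresponds to single-operation transactions, or 1,000 full transactions of 100 operations each.

```scala
object ChannelFunding {
  val StroopsPerXlm: Long     = 10000000L          // 1 XLM = 10^7 stroops
  val BaseFeeStroops: Long    = 100L               // assumed minimum fee per operation
  val MinBalanceStroops: Long = 1L * StroopsPerXlm // the 1 XLM minimum mentioned above

  // Funds left for fees once the minimum balance is set aside.
  def spendable(fundingStroops: Long): Long =
    math.max(0L, fundingStroops - MinBalanceStroops)

  // How many operations the spendable funds can pay for at the base fee.
  def operationsAffordable(fundingStroops: Long): Long =
    spendable(fundingStroops) / BaseFeeStroops

  // How many transactions of a given size that amounts to.
  def transactionsAffordable(fundingStroops: Long, opsPerTx: Int): Long =
    operationsAffordable(fundingStroops) / opsPerTx

  def main(args: Array[String]): Unit = {
    val funding = 2L * StroopsPerXlm
    println(s"operations:           ${operationsAffordable(funding)}")
    println(s"single-op txs:        ${transactionsAffordable(funding, opsPerTx = 1)}")
    println(s"full (100-op) txs:    ${transactionsAffordable(funding, opsPerTx = 100)}")
  }
}
```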

To ensure that work is distributed evenly between the proxy accounts, let’s put them behind an Akka Router configured with RoundRobinRoutingLogic. As these class names suggest, this provides a single routing point that forwards any operations it receives to the proxy accounts in round-robin fashion.

<p> CODE: https://gist.github.com/kylemccollom/f21e84b153c3578fc0ae898cbf762fe3.js</p>

Each of the proxy accounts is represented by a ChannelAccount actor.
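For readers who have not met round-robin routing before, the dispatch order can be modelled without Akka at all. The following is only an illustration of the ordering, not Akka's implementation; `distribute` is a hypothetical helper and the numbered slots stand in for ChannelAccount actors.

```scala
object RoundRobinDemo {
  // Cycle through the slots in order: item 0 -> slot 0, item 1 -> slot 1, ...,
  // wrapping back to slot 0 after the last slot.
  def distribute[A](items: Seq[A], slots: Int): Map[Int, Seq[A]] = {
    require(slots > 0, "need at least one slot")
    items.zipWithIndex
      .groupBy { case (_, index) => index % slots }
      .map { case (slot, pairs) => slot -> pairs.map(_._1) }
  }

  def main(args: Array[String]): Unit = {
    val payments   = (1 to 2000).map(n => s"payment-$n")
    val perAccount = distribute(payments, slots = 24)

    // Count how many slots received each batch size.
    val sizes = perAccount.values.toList.map(_.size).groupBy(identity).map {
      case (size, occurrences) => size -> occurrences.size
    }
    println(sizes) // 8 slots receive 84 payments and 16 receive 83
  }
}
```

With 2000 payments and 24 slots, no account is ever more than one operation ahead of another, which is the even spread the router is there to provide.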

Tune in

With the channel established, it’s now trivial to submit operations for inclusion in parallel transactions.

<p> CODE: https://gist.github.com/kylemccollom/561874e85dafada1b7794cadc7a20d69.js</p>

When the Channel receives a Pay request, it simply forwards it to the router. The router picks the next ChannelAccount in its round-robin rotation and forwards the message to it.

<p> CODE: https://gist.github.com/kylemccollom/c9e83e857c59ea82796af9b2beb6d6b5.js</p>

The ChannelAccount converts the request into an Operation and, crucially, ensures that the source of the operation is the primary account.

Rather than transacting each request immediately, the `ChannelAccount` batches requests for a short period of time in order to find a good balance between timeliness and transaction size.

<p> CODE: https://gist.github.com/kylemccollom/5b371e58dd3f0dfbfe33841b93ecd983.js</p>

When a request arrives:

- If the batch is empty, the ChannelAccount schedules a future reminder to itself to flush all the pending operations.

- If the request is the last that can fit in a batch, it transacts early.

- Otherwise, it just adds the operation to the in-memory batch and waits for the reminder message to flush it.
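The three cases above amount to a small state machine, sketched here in plain Scala so it can run on its own. This is not the actor code from the article: strings stand in for operations, the `Action` values and function names are invented, and the timer is represented only by the `ScheduleFlush` result rather than by a real scheduler.

```scala
object BatchingDemo {
  val MaxOperations = 100 // a transaction may contain up to 100 operations

  sealed trait Action
  case object ScheduleFlush extends Action                      // first operation: set a reminder
  case object Wait extends Action                               // keep collecting
  final case class Transact(ops: Vector[String]) extends Action // send this batch now

  // Handle an incoming operation; returns the new pending batch and what to do next.
  def onOperation(batch: Vector[String], op: String): (Vector[String], Action) = {
    val next = batch :+ op
    if (next.size == MaxOperations) (Vector.empty, Transact(next)) // full: send early
    else if (batch.isEmpty) (next, ScheduleFlush)                  // first in a new batch
    else (next, Wait)
  }

  // Handle the reminder: send whatever is pending, if anything.
  def onFlush(batch: Vector[String]): (Vector[String], Action) =
    if (batch.isEmpty) (batch, Wait) else (Vector.empty, Transact(batch))

  def main(args: Array[String]): Unit = {
    // Feed 250 operations through and record the size of every batch sent early.
    val (pending, sent) =
      (1 to 250).foldLeft((Vector.empty[String], Vector.empty[Int])) {
        case ((batch, sizes), n) =>
          onOperation(batch, s"op-$n") match {
            case (next, Transact(ops)) => (next, sizes :+ ops.size)
            case (next, _)             => (next, sizes)
          }
      }
    println(s"sent early: $sent, still pending: ${pending.size}")
    println(s"after the reminder fires: ${onFlush(pending)._2}")
  }
}
```

One thing this model leaves open is what happens to a reminder that was scheduled for a batch that has since been sent early. Here it would simply flush whatever newer batch is pending, a little sooner than planned.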

<p> CODE: https://gist.github.com/kylemccollom/2336c34f1898fb5dcce7cb50082a2754.js</p>

The scheduled message to Flush, when it arrives, is treated just the same as when the batch is full. We immediately transact and clear the batch.

<p> CODE: https://gist.github.com/kylemccollom/17fcf621e208ecc386bda037df7f7856.js</p>

Sending the batch involves creating a new Transaction from the channel account and folding all of the batched operations into it. Importantly, the transaction is signed by both the primary (paying) account and the channel account; otherwise it would be rejected by the network.
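The fold and the two-signature requirement can be illustrated with a stand-alone model. The `Transaction` and `Operation` classes below are hypothetical stand-ins, not the SDK's, and the signing rule is deliberately simplified: every account that appears as the transaction source or as an operation source must have signed, ignoring multi-signature thresholds.

```scala
object BatchTransaction {
  // Hypothetical, simplified types for illustration only.
  final case class Operation(source: String, description: String)

  final case class Transaction(
      source: String,
      sequence: Long,
      operations: Vector[Operation],
      signers: Set[String]
  ) {
    def add(op: Operation): Transaction = copy(operations = operations :+ op)
    def sign(account: String): Transaction = copy(signers = signers + account)

    // The fee is charged per operation (100 stroops assumed as the base fee).
    def feeStroops(baseFee: Long = 100L): Long = operations.size * baseFee

    // Simplified rule: the submitter and every operation source must sign.
    def requiredSigners: Set[String] = operations.map(_.source).toSet + source
    def isFullySigned: Boolean = requiredSigners.subsetOf(signers)
  }

  // Start from an empty transaction and fold each batched operation into it.
  def build(channel: String, nextSequence: Long, batch: Seq[Operation]): Transaction =
    batch.foldLeft(Transaction(channel, nextSequence, Vector.empty, Set.empty)) {
      (tx, op) => tx.add(op)
    }

  def main(args: Array[String]): Unit = {
    val batch = Vector.fill(84)(Operation(source = "primary", description = "pay 1 XLM"))
    val tx    = build("channelA", nextSequence = 1L, batch)

    println(tx.sign("channelA").isFullySigned)                 // false: primary has not signed
    println(tx.sign("channelA").sign("primary").isFullySigned) // true
    println(tx.feeStroops())                                   // 8400
  }
}
```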

Drop out

When the Channel receives a Close message, it’s time to merge all of the channel accounts back into the primary account.

<p> CODE: https://gist.github.com/kylemccollom/4805aab0f513b7599e934f1a1d035d0a.js</p>

Wrapping a Close message in a Broadcast tells the router to forward the message to every channel account simultaneously.

<p> CODE: https://gist.github.com/kylemccollom/4fc94346433bdf06fff8cf388232e6f7.js</p>

On receipt of the Close message from the router, the channel accounts will submit a final transaction to merge themselves back into the primary account.
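The effect of closing on balances can be sketched as a fold over the channel accounts. This is a bookkeeping model only, with invented names: each channel account pays the fee for its own merge transaction (100 stroops assumed), its remaining balance moves to the primary account, and the channel account disappears.

```scala
object CloseDemo {
  val MergeFeeStroops: Long = 100L   // assumed base fee for a one-operation transaction
  type Balances = Map[String, Long]  // account id -> balance in stroops

  // Merge one channel account into the primary account.
  def merge(balances: Balances, channel: String, primary: String): Balances = {
    val remaining = balances.getOrElse(channel, 0L) - MergeFeeStroops
    require(remaining >= 0, s"$channel cannot afford the merge fee")
    (balances - channel).updated(primary, balances.getOrElse(primary, 0L) + remaining)
  }

  // Merge every channel account in turn.
  def closeAll(balances: Balances, channels: Seq[String], primary: String): Balances =
    channels.foldLeft(balances)((current, channel) => merge(current, channel, primary))

  def main(args: Array[String]): Unit = {
    val channels = (1 to 3).map(n => s"channel-$n")
    val start: Balances =
      channels.map(_ -> 15000000L).toMap + ("primary" -> 1000000000L)

    println(closeAll(start, channels, "primary"))
  }
}
```

After the fold only the primary account remains, holding its original balance plus everything the channel accounts had left, less one fee per merge.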

How fast does it flow?

In this example, running on my dinky laptop, I processed 2000 operations in a channel 24 accounts wide. Each account submitted a single transaction of 83 or 84 operations. Over multiple test runs, these batches were consistently processed over approximately 7 ledgers without error.
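For a rough sense of scale, the figures above can be turned into a rate and compared with a single account that waits for each transaction to clear before sending the next. This is back-of-envelope arithmetic rather than a benchmark: it assumes the 5 second average ledger time mentioned earlier and one transaction per ledger for the sequential case, and the function names are made up.

```scala
object ThroughputEstimate {
  val LedgerSeconds = 5.0 // average ledger close time quoted earlier in the article

  // Operations per second when a workload clears over a given number of ledgers.
  def observedRate(operations: Int, ledgers: Int): Double =
    operations / (ledgers * LedgerSeconds)

  // Time for one account to send the same workload, one transaction per ledger.
  def sequentialSeconds(operations: Int, opsPerTx: Int): Double =
    math.ceil(operations.toDouble / opsPerTx) * LedgerSeconds

  def main(args: Array[String]): Unit = {
    println(f"channel, 2000 ops over 7 ledgers: ${observedRate(2000, 7)}%.1f ops/s")
    println(f"sequential, 1 op per tx:          ${sequentialSeconds(2000, 1)}%.0f s")
    println(f"sequential, 100 ops per tx:       ${sequentialSeconds(2000, 100)}%.0f s")
  }
}
```

On these assumptions the channel run works out to roughly 57 operations per second, against 10,000 seconds for one-operation transactions sent sequentially, or 100 seconds if the single account also batched 100 operations per transaction.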

Building it up

This exploration into the capabilities of the channel pattern showed that large quantities of operations can be pooled and transmitted in a short time-frame. As it stands, this implementation is a proof of concept that lays the foundation for a more robust solution. A number of interesting potential enhancements can be made based upon it, depending on your project requirements. For example:

- Multi-owner channels. Channels don’t need to operate for a single primary account. Each transaction could include operations with a mix of different sources. Primary accounts could attach and detach themselves from the channel on demand.

- Scalable transaction fees. If the network is congested, the channel could automatically scale up the transaction fee so that it receives priority service.

- Automatic refill. Channel accounts spend transaction fees and will eventually reach the reserve balance. They could stay alive by refilling themselves from the funds of the primary account(s).

- Reactive Streams. The Actor implementation brings with it the baggage of boilerplate code to coordinate different phases of the actor lifecycles. A solution based on Akka Streams would likely be more succinct and possibly even more performant.

Conclusion

Channels are a simple and effective pattern for overcoming the sequential processing limitations and achieving higher volumes on the Stellar network. Efficient implementations can be achieved by modeling their startup, active and tear-down phases on platforms that have first-class support for concurrent processing.

I am always interested in hearing from other Lumenauts on Stellar projects and related topics. Why not share your thoughts in #dev on Slack or contact me directly?